Infiniband is one of those technologies that many have only a cursory awareness of. It is certainly not a ‘mainstream’ technology in comparison to IP, Ethernet or even Fibre Channel. Those who are aware of it know Infiniband as a high performance compute clustering technology that is typically used for very short interconnects within the Data Center. While this is true, its uses and capacity have been expanded into many areas that were once thought to be out of its realm. In addition, many of the distance limitations that have prevented its expanded use are being extended, in some instances to rather amazing distances that rival the more Internet oriented networking technologies. This article will look closely at this networking technology from both historical and evolutionary perspectives. We will also look at some of the unique solutions that are offered by its use.

Not your mother’s Infiniband

The InfiniBand (IB) specification defines the methods and architecture of the interconnect that links the I/O subsystems of the next generation of servers, an arrangement otherwise known as compute clustering. The architecture is based on a serial, switched fabric that currently defines link bandwidths between 2.5 and 120 Gbits/sec. It effectively resolves the scalability, expandability, and fault tolerance limitations of the shared bus architecture through the use of switches and routers in the construction of its fabric. In essence, it was created as a bus extension technology to supplant the aging PCI specification.

The protocol is defined as a very thin set of zero copy functions when compared to thicker protocol implementations such as TCP/IP. The figure below illustrates a comparison of the two stacks.

Figure 1. A comparison of TCP/IP and Infiniband Protocols

Note that IB is focused on providing a very specific type of interconnect over a very high reliability line of fairly short distance. In contrast, TCP/IP is intended to support almost any use case over any variety of line quality for undefined distances. In other words, TCP/IP provides robustness for the protocol to work under widely varying conditions. But with this robustness comes overhead. Infiniband instead optimizes the stack to allow for something known as RDMA or Remote Direct Memory Access. RDMA is basically the extension of the direct memory access (DMA) from the memory of one computer into that of another (via READ/WRITE) without involving the server’s operating system. This permits a very high throughput, low latency interconnect which is of particular use to massively parallel compute cluster arrangements. We will return to RDMA and its use a little later.

The figure below shows a typical IB cluster. Note that both the servers and storage are assumed to be relative peers on the network. The network connections do differ, however. HCAs (Host Channel Adapters) are the adapters and drivers that support host server platforms. TCAs (Target Channel Adapters) are the I/O subsystem components such as RAID or MAID disk subsystems.

Figure 2. An example Infiniband Network

At its most basic form the IB specification defines the interconnect as point-to-point differential pairs with a 2.5 Gb/s signaling rate, one transmit and one receive (full duplex), using LVDS and 8B/10B encoding. This single channel interconnect signals at 2.5 Gb/s, which leaves 2 Gb/s of usable data once the 8B/10B encoding overhead is removed. This is referred to as a 1X interconnect. The specification also allows for the bonding of these single channels into aggregate interconnects to yield higher bandwidths. 4X defines an interface with 8 differential pairs (4 per direction); the same holds for fiber, with 4 transmit and 4 receive. 12X defines an interface with 24 differential pairs (12 per direction), or 12 transmit and 12 receive for fiber. The table below illustrates various characteristics of the various channel classes including usable data rates.
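The arithmetic behind these channel classes can be sketched in a few lines of Python. This is a minimal illustration rather than anything taken from the specification: the 2.5 Gb/s lane rate and the 8B/10B encoding come from the text above, while the DDR and QDR lane rates (5 and 10 Gb/s per lane) and all function and variable names are assumptions added for the example.

```python
# Per-lane signaling rates in Gb/s. SDR is the 2.5 Gb/s rate described above;
# DDR and QDR are later, faster lane rates (assumed here for illustration).
LANE_RATE_GBPS = {"SDR": 2.5, "DDR": 5.0, "QDR": 10.0}
LINK_WIDTHS = (1, 4, 12)

# 8B/10B encoding carries 8 data bits in every 10 bits on the wire.
DATA_BITS, LINE_BITS = 8, 10


def link_rates(generation: str, width: int) -> tuple[float, float]:
    """Return (raw signaling rate, usable data rate) in Gb/s, per direction."""
    raw = LANE_RATE_GBPS[generation] * width
    usable = raw * DATA_BITS / LINE_BITS
    return raw, usable


if __name__ == "__main__":
    for gen in LANE_RATE_GBPS:
        for width in LINK_WIDTHS:
            raw, usable = link_rates(gen, width)
            print(f"{width:>2}X {gen}: {raw:6.1f} Gb/s raw, {usable:6.1f} Gb/s data")
```

Under these assumptions a 12X link at 10 Gb/s per lane gives the 120 Gb/s upper figure quoted earlier, of which 96 Gb/s is usable data.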

Table 1.

Also note that the technology is not standing still. The graph below illustrates the evolution of the IB interface over time.

Figure 3. Graph illustrating the bandwidth evolution of IB

As the topology above in figure 2 shows however, the effective distance of the technology is limited to single data centers. The table below provides some reference to the distance limitations of the various protocols used in the data center environment including IB.

Table 2.

Note that while none of the other technologies extend much further from a simplex link perspective, they do have well established methods of transport that can extend them beyond the data center and even the campus.

This lack of extensibility is changing for Infiniband however. There are products that can extend its supportable link distance to tens, if not hundreds of Kilometers, distances which rival well established WAN interconnects. New products also allow for the inter-connection of IB to the other well established data center protocols, Fibre Channel and Ethernet. These new developments are expanding its potential topology thereby providing the evolutionary framework for IB to become an effective networking tool for next generation Business Continuity and Site Resiliency solutions. In figure 4 below, if we compare the relative bandwidth capacities of IB with Ethernet and Fibre Channel we find a drastic difference in effective bandwidth both presently and in the future.

Figure 4. A relative bandwidth comparison of various Data Center protocols

Virtual I/O

With a very high bandwidth, low latency connection it becomes very desirable to use the interconnect for more than one purpose. Because of the ultra-thin profile of the Infiniband stack, it can easily accommodate various protocols within virtual interfaces (VI) that serve different roles. As the figure below illustrates, a host could connect virtually to its data storage resources over iSCSI (via iSER) or native SCSI (via SRP). In addition it could run its host IP stack as a virtual interface as well. This capacity to provide a low overhead, high bandwidth link that can support various virtual interfaces lends itself well to interface consolidation within the data center environment. As we shall see, however, in combination with the recent developments in extensibility, IB is becoming increasingly useful for a cloud site resiliency model.

Figure 5. Virtual Interfaces supporting different protocols

Infiniband for Storage Networking

One of the primary uses for Data Center interconnects is to attach server resources to data storage subsystems. Original direct storage systems were connected to server resources via internal busses (i.e. PCI) or over very short SCSI (Small Computer System Interface) connections, an arrangement known as Direct Attached Storage (DAS). The SCSI interface is at the heart of most storage networking standards and defines the internal behaviors of these protocols between hosts (initiators) and I/O devices (targets). An example for our purposes is a host writing data to or reading data from a storage subsystem.

Infiniband has multiple models for supporting SCSI (including iSCSI). The figure below illustrates two of the block storage protocols used, SRP and iSER.

Figure 6. Two IB block storage protocols

SRP (SCSI RDMA Protocol) is a protocol that allows remote command access to a SCSI device. The use of RDMA avoids the overhead and latency of TCP/IP and, because it allows for direct RDMA write/read, is a zero copy function. SRP never made it into a formal standard. Defined by ANSI T10, the latest draft is rev. 16a (6/3/02).

iSER (iSCSI Extensions for RDMA) is a protocol model defined by the IETF that maps the iSCSI protocol directly over RDMA and is part of the ‘Data Mover’ architecture. As such, iSCSI management infrastructures can be leveraged. While most say that SRP is easier to implement than iSER, iSER provides enhanced end to end management via iSCSI management. Both protocol models, to effectively support RDMA, possess a peculiar function: every RDMA operation is issued by the target and directed at the initiator’s memory. As such, a SCSI read request translates into an RDMA write from the target into the initiator’s memory, whereas a SCSI write request translates into an RDMA read by the target from the initiator’s memory. As a result some of the functional requirements for the I/O process shift to the target, which offloads the initiator or host. While this might seem strange, if one thinks about what RDMA is, it only makes sense to leverage the direct memory access of the host. This results in a very efficient use of Infiniband for data storage.
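The direction of the RDMA operations is easy to get backwards, so here is a toy Python model of it. It is only a sketch of the idea, not SRP or iSER code: the `Initiator` and `Target` classes, the integer memory handles, and the block map are all hypothetical names invented for the illustration, and plain byte copies stand in for what a channel adapter would do in hardware.

```python
class Initiator:
    """Host side: registers memory regions that the target may access."""

    def __init__(self):
        self.regions = {}  # hypothetical handle -> registered buffer

    def register(self, handle: int, buf: bytearray) -> None:
        self.regions[handle] = buf


class Target:
    """Storage side: owns the blocks and issues every RDMA operation."""

    def __init__(self, blocks: dict[int, bytes]):
        self.blocks = dict(blocks)
        self.rdma_log = []  # records which RDMA operation served each request

    def scsi_read(self, host: Initiator, lba: int, handle: int) -> None:
        # SCSI READ: the target pushes data INTO host memory with an RDMA WRITE.
        data = self.blocks[lba]
        buf = host.regions[handle]
        if len(data) > len(buf):
            raise ValueError("registered buffer too small")
        buf[:len(data)] = data
        self.rdma_log.append("RDMA_WRITE")

    def scsi_write(self, host: Initiator, lba: int, handle: int, length: int) -> None:
        # SCSI WRITE: the target pulls data FROM host memory with an RDMA READ.
        self.blocks[lba] = bytes(host.regions[handle][:length])
        self.rdma_log.append("RDMA_READ")


if __name__ == "__main__":
    host = Initiator()
    buf = bytearray(8)
    host.register(1, buf)
    target = Target({0: b"BLOCK000"})

    target.scsi_read(host, lba=0, handle=1)       # host buffer now holds block 0
    buf[:] = b"NEWDATA!"
    target.scsi_write(host, lba=5, handle=1, length=8)
    print(target.rdma_log, target.blocks[5])
```

In both cases the host only posts the request and exposes a buffer; the target does the data movement, which is the offload described above.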

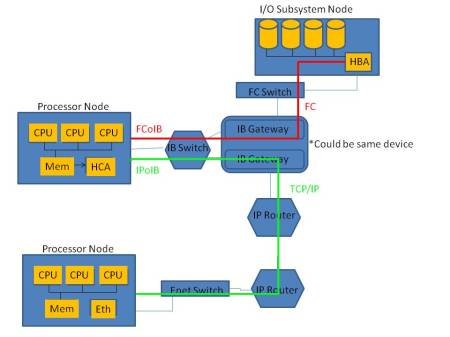

Another iteration of a storage networking protocol over IB is Fibre Channel over Infiniband (FCoIB). In this instance, the SCSI protocol is embedded into the Fibre Channel interface, which is in turn run as a virtual interface inside of IB. Hence, unlike iSER and SRP, FCoIB does not leverage RDMA but runs the Fibre Channel protocol as an additional functional overhead. FCoIB does however provide the ability to incorporate existing Fibre Channel SANs into an Infiniband network. The figure below illustrates a network that is supporting both iSER and FCoIB, with a Fibre Channel SAN attached by a gateway that provides the interface between the IB and FC environments.

Figure 7. An IB host supporting both FC & native IB interconnects

As can be seen, a legacy FC SAN can be effectively used in the overall systems network. Add to this high availability and you have a solid solution for a hybrid migration path.

If we stop and think about it, data storage is second only to compute clustering as an ideal usage model for Infiniband. Even so, the use of IB as a SAN is a much more real world usage model for the standard IT organization. Not many IT groups are doing advanced compute clustering, and those that do already know the benefits of IB.

Infiniband & Site Resiliency

Given the standard offered distances of IB, it is little wonder that it has not been often entertained for use in site resiliency. This however, is another area that is changing for Infiniband. There are now technologies available that can extend the distance limitation out to hundreds of kilometers and still provide the native IB protocol end to end. In order to understand the technology we must first understand the inner mechanics of IB.

The figure below shows a comparison between an IB and a TCP/IP reliable connection. The TCP/IP connection shows a typical saw tooth profile, which is the normal result of the working mechanics of the TCP sliding window. The window starts at a nominal size for the connection and gradually increases in size (i.e. bytes transmitted) until a congestion event is encountered. Depending on the severity of the event the window could slide all the way back to the nominal starting size. The reason for this behavior is that TCP reliable connections were developed in a time when most long distance links were far less reliable and of lower quality.
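The saw tooth can be reproduced with a very small simulation. The sketch below is a deliberately simplified additive-increase model, not a real TCP implementation: the window grows by one unit per round trip, a congestion event is assumed whenever the window exceeds a fixed `capacity`, and the hypothetical `loss_factor` parameter stands in for the severity of the event (0.5 halves the window, 0.0 sends it back to the starting size).

```python
def tcp_window_trace(rounds: int, capacity: int, start: int = 1,
                     loss_factor: float = 0.5) -> list[int]:
    """Return the window size for each round trip under a simplified model."""
    window = start
    trace = []
    for _ in range(rounds):
        trace.append(window)
        if window > capacity:
            # Congestion event: shrink the window, never below the start size.
            window = max(start, int(window * loss_factor))
        else:
            # No congestion: grow the window by one unit per round trip.
            window += 1
    return trace


if __name__ == "__main__":
    for size in tcp_window_trace(rounds=24, capacity=8):
        print("#" * size)  # crude text plot of the saw tooth
```

An Infiniband connection plotted the same way would simply sit at the capacity line, dropping to zero only while the sender is out of buffer credits.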

Figure 8. A comparison of the throughput profiles of Infiniband & TCP/IP

If we take a look at the Infiniband throughput profile we find that the saw tooth pattern is replaced by a square profile. The transmission goes instantly to 100% of the offered capacity and stays there until an event occurs that halts the transfer. Then, after a period of time, it resumes at 100% of the offered capacity. This event is termed buffer starvation: the sending Channel Adapter has exhausted its available buffer credits, which are calculated from the available resources and the bandwidth of the interconnect (i.e. 1X, 4X, etc.). Note that the calculation does not include any significant concept of latency. As we covered earlier, Infiniband was originally intended for very short, highly dependable interconnects, so the variable of transmission latency is so slight that it can effectively be ignored within the data center. As a result the relationship of buffer credits to available resources and offered channel capacity resulted in a very high throughput interconnect that seldom ran short of transmit buffer credits, provided things were close.

As distance is extended things become more complex. This is best realized in the familiar bucket analogy. Suppose I sit on one end of a three foot ribbon and you sit on the other. I have a bucket full of bananas (analogous to the data in the transmit queue), whereas you have a bucket that is empty (analogous to your receive queue). As I pass you the bananas, there is only a short distance, which allows for a direct hand off. Remembering that this is RDMA, I pass you the bananas at a very fast predetermined speed (the speed of the offered channel) and you take them just as fast. At the end of passing you the bananas, you pass me a quarter to acknowledge the fact that the bananas have been received (this is analogous to the completion queue element shown in figure 1). Now imagine that there is someone standing next to me who is providing me bananas at a predetermined rate (this is the available processing speed of the system). He will only start to fill my bucket if the following two conditions exist: 1) my bucket is empty and 2) I give him the quarter for the last bucket. Obviously the time required end to end will impact that rate. If the resulting rate is equal to the offered channel speed, we will never run out of bananas and you and I will be very tired. If that rate is less than the offered channel speed then at some point I will run out of bananas. At that point I will need to wait until my bucket is full before I begin passing them to you again. This is buffer starvation. Now in a local scenario, we see that the main tuning parameters are a) the size of our buckets (available memory resources for RDMA) and b) the rate of the individual placing bananas into my bucket (the system speed). If these parameters are tuned correctly, the connection will be of very high performance. (You and I will move a heck of a lot of bananas.) The further we are from that optimal set of parameters, the lower the performance profile will be, and an improperly tuned system will perform dismally.

Now let’s take that ribbon and extend it to twelve feet. As we watch the following scenario unfold it becomes obvious why buffer starvation limits distance. Normally, I would toss you a banana and wait for you to catch it. Then I would toss you another one. If you missed one and had to go pick it up off of the ground (the bruised banana is a transmission or reception error), I would wait until you were ready to catch another one. This in reality is closer to TCP/IP. With RDMA, I toss you the bananas just as if you were sitting next to me. What results is a flurry of bananas in the air, all of which you catch successfully because, hey, you’re good. (In reality, it is because we are assuming a high quality interconnect.) After I fling the bananas however, I need to wait to receive my quarter and until my bucket is in turn refilled. At twelve feet, if nothing else changes, we will be forced to pause far more often as my bucket refills. If we move to twenty feet the situation gets even more skewed. We can tune certain things like the depth of our buckets or the speed of the replenishment, but these get to be unrealistic as we stretch the distance farther and farther. This is what in essence has kept Infiniband inside the data center.*

*Note that the analogy is not totally accurate with the technical issues but it is close enough to give you a feel of the issues at hand.
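To put rough numbers on the analogy, the Python sketch below estimates the throughput a sender can sustain when it may only have a fixed amount of credited data in flight per round trip. It is a back-of-the-envelope model under stated assumptions, not a description of any product: propagation in fiber is taken as roughly 5 microseconds per kilometer one way, the 128 KiB credit pool is a hypothetical figure, the 8 Gb/s rate is the usable data rate of a 4X link at 2.5 Gb/s per lane, and the time taken to refill the bucket (system speed) is ignored.

```python
FIBER_DELAY_S_PER_KM = 5e-6  # assumed one-way propagation delay in fiber


def round_trip_s(distance_km: float) -> float:
    """Round trip propagation time in seconds for a link of this length."""
    return 2 * distance_km * FIBER_DELAY_S_PER_KM


def sustained_gbps(link_gbps: float, credit_bytes: int, distance_km: float) -> float:
    """Throughput when at most credit_bytes may be in flight per round trip."""
    rtt = round_trip_s(distance_km)
    if rtt == 0:
        return link_gbps
    credit_limited = credit_bytes * 8 / rtt / 1e9
    # The link can never go faster than its own data rate.
    return min(link_gbps, credit_limited)


if __name__ == "__main__":
    link = 8.0              # Gb/s, usable data rate of a 4X link (see above)
    credits = 128 * 1024    # bytes, hypothetical credit pool
    for km in (0.1, 1, 10, 20, 100):
        rate = sustained_gbps(link, credits, km)
        print(f"{km:>6} km: {rate:5.2f} Gb/s")
```

With these assumed figures the link stays full out to about 10 km and then falls away, to roughly 1 Gb/s at 100 km, which is the pausing described in the analogy.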

Now what would happen if I were to put some folks in between us who had reserve buckets for bananas I send to you and you were to do the same for bananas you in turn send to me? Also, unlike the individual who fills my bucket who deals with other intensive tasks such as banana origination (the upper system and application), this person is dedicated one hundred percent to the purpose of relaying bananas. Add to this the fact that this individual has enough quarters to give me for twice the size of his bucket, and yours in turn as well. If we give them nice deep buckets we can see a scenario that would unfold as follows.

I would wait until my bucket was full, then I would begin to hand off my bananas to the person in front of me. If this individual were three feet from me I could hand them off directly as I did with you originally. Better than that, I could simply place the bananas in their bucket and they would give me a quarter each time I emptied mine. The process repeats until their bucket is full. They then can begin throwing the bananas to you. While we are at it, why should they toss directly to you? Let’s put another individual in front of you who is also completely focused. But instead of being focused on tossing bananas, they would be focused on catching them. Now if these people’s buckets are roughly 4 times the size of yours and mine, and the bananas were relayed over six feet out to your receiver at the same rate as I hand them off, we in theory should never run out of bananas. There would be an initial period of the channel filling and the use of credit, but after that initial period the channel could operate at optimal speed, with the initial offset in reserve buffer credits being related to the distance or latency of the interconnect. The reason for the channel fill is that the person has to wait until their bucket is full before they can begin tossing but, importantly, after that initial fill point they will continue to toss bananas as long as there are some in the bucket. In essence, I always have an open channel for placing bananas and I always get paid, and can in turn pay the guy who fills my bucket only on the conditions mentioned earlier.

This buffering characteristic has led to a new class of devices that can provide significant extension to the distance offered by Infiniband. Some of the latest systems can provide buffer credits equivalent to one full second, which is A LOT of time at modern networking speeds. If we add these new devices and another switch to the topology shown earlier we can begin to realize some very big distances that become very attractive for real time active-active site resiliency.

Figure 9. An extended Infiniband Network

As a case in point, the figure above shows an Infiniband network that is extended out to support data centers that are 20 km apart. The systems at each end, using RDMA, effectively regard each other as local and, for all intents and purposes, in the same data center. This means that versions of fault tolerance and active-active high availability that otherwise would be out of the question are now quite feasible to design and work in practice. A common virtualized pool of storage resources using iSER allows for seamless treatment of data and brings a reduced degree of fault dependency between the server and storage systems. Either side could experience failure at either the server or storage system level and still be resilient. Adding further systems redundancy for both servers and storage locally on each side provides further resiliency and allows for off line background manipulation of the data footprint for replication, testing, etc.
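As a rough sizing exercise for a link like this, the Python sketch below works out how much buffering is needed to keep a link full over a given distance, and how far a given amount of buffering (expressed in seconds of line rate) would reach. It is an estimate under stated assumptions only: propagation is taken as about 5 microseconds per kilometer one way in fiber, the 8 Gb/s rate is the usable data rate of a 4X link at 2.5 Gb/s per lane, switching and processing delays are ignored, and the function names are invented for the example.

```python
FIBER_DELAY_S_PER_KM = 5e-6  # assumed one-way propagation delay in fiber


def buffer_bytes_needed(link_gbps: float, distance_km: float) -> float:
    """Bytes that must be in flight to keep the link full for one round trip."""
    rtt = 2 * distance_km * FIBER_DELAY_S_PER_KM
    return link_gbps * 1e9 * rtt / 8


def reach_km(buffer_seconds: float) -> float:
    """Distance whose round trip time equals the buffered time at line rate."""
    return buffer_seconds / (2 * FIBER_DELAY_S_PER_KM)


if __name__ == "__main__":
    needed = buffer_bytes_needed(link_gbps=8.0, distance_km=20)
    print(f"20 km at 8 Gb/s needs about {needed / 1000:.0f} kB in flight")
    print(f"1 s of buffering covers about {reach_km(1.0):,.0f} km of fiber delay")
```

On propagation delay alone, 20 km needs only a couple of hundred kilobytes of credit at this rate, so a device that buffers a full second of traffic has headroom far beyond metro distances; in practice other delays and link quality would set the real limit.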

Figure 10. A Hybrid Infiniband network

In order for any interface consolidation effort to work in the data center, the virtual interface solution must provide a method of connectivity to other forms of networking technology. After all, what good is an IP stack that can only communicate within the IB cluster? A new generation of gateway products provides this option. As shown in the figure above, gateway products exist that can tie IB to both Ethernet and Fibre Channel topologies. This makes it possible to consolidate data center interfaces and still provide general internet IP access as well as connectivity to traditional SAN topologies and resources such as Fibre Channel based storage arrays.

While it is clear that Infiniband is unlikely to become a mainstream networking technology, it is also clear that there are many merits to the technology that have kept it alive and provided enough motivation (i.e. market) for its evolution into a more mature architectural component. With the advent of higher speed Ethernet and FCoE as well as the current development of lower latency profiles for DC Ethernet, the longer range future of Infiniband may be similar to that of Token Ring or FDDI. On the other hand, even with these developments, the technology may be more likened to ATM, which, while far from mainstream, is still being used extensively in certain areas. If one has the convenience of waiting for these trends to sort themselves out then moving to Infiniband in the Data Center may be premature. However, if you are one of the many IT architects who are faced with intense low latency performance requirements that need to be addressed today and not some time in the future, IB may be the right technology choice for you. It has been implemented by enough organizations that best practices are fairly well defined. It has matured enough to provide for extended connectivity outside of the glass house, and gateway technologies are now in place that can provide connectivity out into other more traditional forms of networking technology. Infiniband may never set the world on fire, but it has the potential to put out fires that are currently burning in certain high performance application and data center environments.

February 20, 2010 at 2:32 am |

Hey Ed,

Nice article. I have worked on IB when I was in Topspin. Good old days!

Regards,

Nikhil

February 21, 2010 at 7:49 pm |

Thanks Nikhil!

April 8, 2010 at 6:39 am |

Great article! I think your conclusions are on the money 🙂

April 8, 2010 at 2:19 pm |

Thanks Adin!

July 3, 2012 at 5:51 pm |

I am leaning more and more towards 10 Gb (and on up) Ethernet as the preferred solution. Granted, there are some things that Infiniband will be superior in, such as RDMA, for some time to come.

But from what I have seen, some recent testing of RoCEE (RDMA over Converged Enhanced Ethernet) is promising.
